Ethical Use Of AI In Hiring

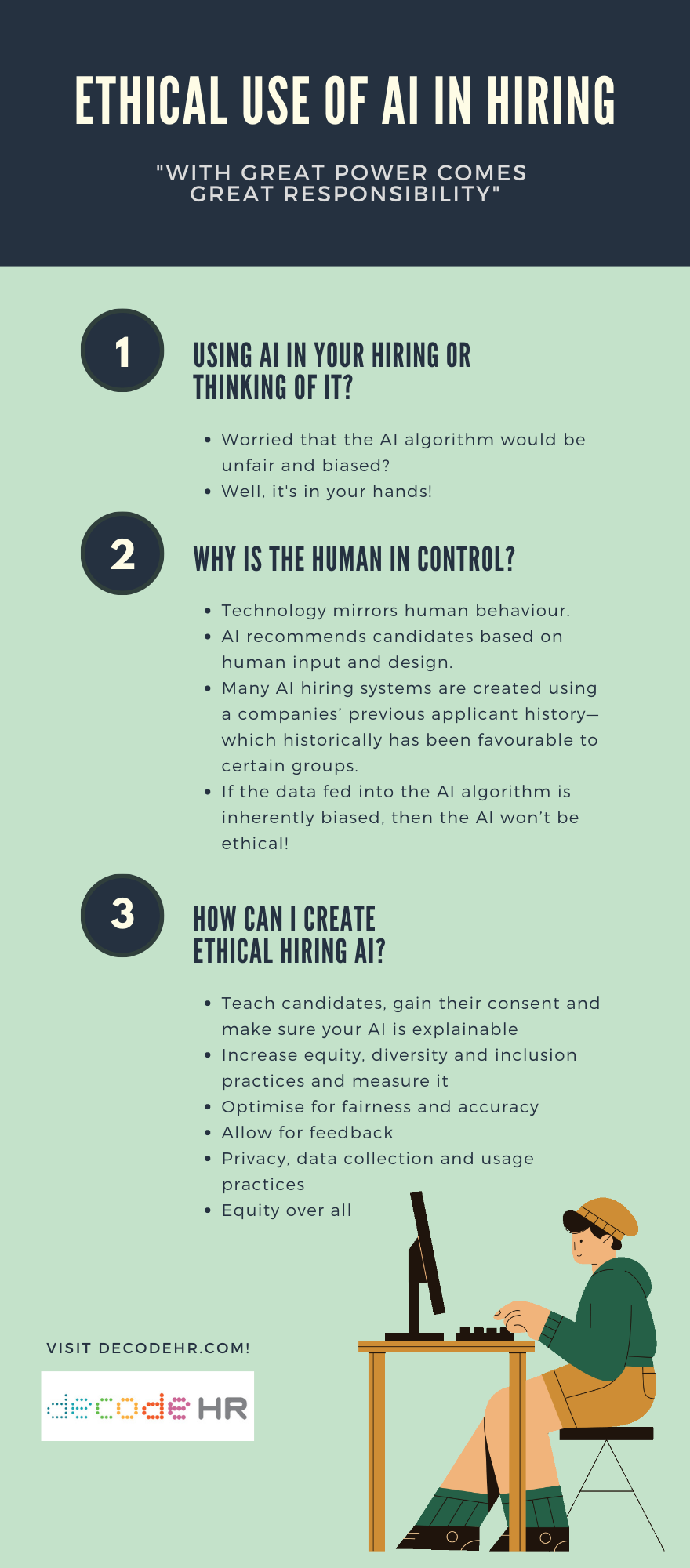

Technology in itself can never be ethical or unethical, because it mirrors human behaviour. If you use AI in your hiring, or are thinking of doing so, here is how to make the technology more equitable and inclusive.

In the battle for talent, Artificial Intelligence (AI) has shown it can play an important role in optimising how organisations source, rank and select potential candidates. Intelligent hiring solutions are also seen as a powerful way to reduce the unconscious bias and prejudice that can permeate traditional recruitment methods. But while these solutions may claim to make hiring more inclusive and efficient, how ethical are they in practice? The question has been the subject of much recent debate, and today we take a deeper look at the future of ethical AI in hiring.

Infographic by DecodeHR.

How are AI hiring solutions used now?

Major firms across the globe, including Hilton, IKEA and Intel, have already adopted machine learning and algorithmic decision-making in their recruitment to make more accurate decisions while saving time and money in finding candidates. Unlike traditional recruitment methods, such as face-to-face interviews, employee referrals and manual CV screening, AI can locate patterns unseen by the human eye. One of its most powerful capabilities is giving hiring managers more precise and efficient predictions of a candidate’s likely success, based on expected work behaviours and performance capabilities.

But in recent years, AI screening in recruitment has come under scrutiny for the ethics of how certain algorithms select, predict and favour candidates. This was infamously brought into the limelight when Amazon shut down its own proprietary AI hiring tool in 2017, after it was shown to discriminate against women. Concerns around fairness and transparency are therefore understandable, but a lot can change in four years in a field innovating this quickly, so let’s take a look at where things stand.

Where does the controversy lie?

While it sounds like the start of a great sci-fi movie, hiring algorithms do not become biased because of some technical malfunction or because the computer develops a ‘mind of its own’. Many AI systems use real people as models of what success looks like in a given role. This “training data set” often includes staff or managers who have been deemed high performers. Based on the training set, AI systems process and compare the profiles of job applicants, then make recommendations based on how closely each candidate’s characteristics match those of the ideal employee.
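To make the matching step concrete, here is a minimal Python sketch. It assumes each person has already been reduced to a few numeric features scaled from 0 to 1 (the feature names are hypothetical), builds an “ideal employee” profile by averaging the high performers in the training set, and ranks applicants by cosine similarity to that profile. Real systems use far richer data and models; cosine similarity is simply one plausible way to measure “how closely” a candidate matches, not the method of any particular vendor.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical representation: feature name -> value already scaled to the range 0..1.
Profile = Dict[str, float]


def ideal_profile(high_performers: List[Profile]) -> Profile:
    """Average each feature across the training set of high performers."""
    features = {f for p in high_performers for f in p}
    return {
        f: sum(p.get(f, 0.0) for p in high_performers) / len(high_performers)
        for f in features
    }


def cosine_similarity(a: Profile, b: Profile) -> float:
    """Cosine of the angle between two sparse feature vectors (1.0 = same direction)."""
    features = set(a) | set(b)
    dot = sum(a.get(f, 0.0) * b.get(f, 0.0) for f in features)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_candidates(
    high_performers: List[Profile], candidates: Dict[str, Profile]
) -> List[Tuple[str, float]]:
    """Return (candidate, similarity) pairs, closest match to the ideal first."""
    ideal = ideal_profile(high_performers)
    scored = [(name, cosine_similarity(p, ideal)) for name, p in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical training set and applicants.
    training_set = [
        {"skills_match": 0.9, "assessment_score": 0.7, "years_experience": 0.8},
        {"skills_match": 0.8, "assessment_score": 0.9, "years_experience": 0.6},
    ]
    applicants = {
        "applicant_a": {"skills_match": 0.85, "assessment_score": 0.8, "years_experience": 0.7},
        "applicant_b": {"skills_match": 0.2, "assessment_score": 0.9, "years_experience": 0.1},
    }
    for name, score in rank_candidates(training_set, applicants):
        print(f"{name}: {score:.3f}")
```

Because the ideal profile is just the average of whoever is in the training set, any skew in that group carries straight into the ranking, which is the risk described in the next paragraph.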

This method can help find the best person for a role faster and more efficiently than before, but in this lies both promise and peril. Many AI hiring systems are built using companies’ previous applicant history, which has historically favoured certain groups, typically cisgender white men (as seen in Amazon’s efforts). So if the training set and/or the data are biased, and the algorithms are not sufficiently audited, AI will only aggravate bias in hiring and uniformity in organisations, rather than solve the problem it claims to address. The problem is not without remedy, however, especially as more hiring data has become available in recent years. If the training set starts from a diverse pool of individuals, and demographically unbiased data is used to measure the people in it, the results can fundamentally reduce bias and increase diversity and inclusion better than humans ever could.

Technology mirrors human behaviour

What is most apparent is that many of the problems sparking ethical debate about AI stem from subjective human input or bias in its design. Though opponents argue that AI is no better than traditional methods, they frequently forget that some of these systems are emulating our own behaviour. Algorithms can only be as good as the data they are given, so it is the ethical responsibility of software developers to ensure a robust and diverse data set. The main thing to remember is that while we talk about ‘ethical AI’, technology can never really be ethical or unethical; only the humans who create and deploy it can be.

While there will always be critics of using AI for more objective hiring decisions, it must be said that all progress is good progress in the battle for equity, diversity and inclusion. Unfortunately, when it comes to assessing talent or potential, many organisations still play it by ear. Recruiters can make split-second judgments when filtering resumes and deciding who to weed out. Hiring managers make fast judgments and label them as intuition, or overlook factual data and hire based on perceived cultural fit. Human nature means we make decisions based on our own perceptions of education, age, gender and other factors, which can have the unfortunate effect of narrowing the pipeline for marginalised groups. By making these screening decisions more objective, we can expect to see more equity and inclusion.

How can the use of AI in hiring be more ethical?

With great power comes great responsibility, and the last few years have seen a whole host of new frameworks and guidelines to help organisations use AI ethically, including in talent acquisition. There are also well-established scientific principles for evaluating assessment and selection tools, which can be applied to AI and to any other emerging innovation. While most organisations won’t need that level of scrutiny, there is general agreement on the principles for ethical AI in hiring.

Educate candidates, gain their consent and make sure your AI is explainable

Organisations can ask prospective employees to opt in to providing their personal data, knowing that it will be analysed, stored and used by AI systems to make HR-related decisions. Candidates should also be told why being added to talent pools and having their skills and attributes analysed will benefit them now and in the future, with firms ready to explain the who, what, why and how.

AI providers also need to move away from black-box models and make their products explainable. If a candidate has a characteristic that is linked with success in a role, the organisation needs to understand why that is the case, and be able to explain the mechanism behind the link. In short, AI systems should be able to predict and explain “causation”, not just find “correlation”.
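As a simple illustration of the difference between a black box and an explainable score, the sketch below uses a transparent additive model: every feature has a visible weight, and the function reports how much each feature contributed to a candidate’s total. The weights and feature names are hypothetical. Note that this explains why the model produced a given score; showing that a characteristic actually causes success in the role still requires validation outside the model.

```python
from typing import Dict, List, Tuple

# Hypothetical, human-readable weights for an illustrative transparent scoring model.
WEIGHTS: Dict[str, float] = {
    "skills_match": 0.5,
    "assessment_score": 0.3,
    "years_experience": 0.2,
}


def explain_score(candidate: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the total score and each feature's contribution, largest first."""
    contributions = [(f, w * candidate.get(f, 0.0)) for f, w in WEIGHTS.items()]
    contributions.sort(key=lambda pair: pair[1], reverse=True)
    total = sum(c for _, c in contributions)
    return total, contributions


if __name__ == "__main__":
    total, parts = explain_score(
        {"skills_match": 0.8, "assessment_score": 0.5, "years_experience": 1.0}
    )
    print(f"score = {total:.2f}")
    for feature, contribution in parts:
        print(f"  {feature}: +{contribution:.2f}")
```

A per-feature breakdown like this is also the kind of output that could support the candidate feedback discussed later in this article.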

Increase equity, diversity and inclusion practices, and measure them

Increasing the diversity of candidates and data helps ensure that traditionally overlooked or underrepresented candidates are evaluated correctly. Examples include adding to talent pools from outside the usual sources, and continuing to strengthen objective measures that ignore identifying characteristics which could lead to prejudiced decisions. X0PA ROOM goes as far as offering the ability to completely mask an applicant’s identity using AI, through both CV screening and anonymous one-way video interviews, as a way to limit unconscious bias in the early stages of recruitment.
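Masking can start with something as simple as stripping identifying fields from an application before a screener, human or algorithmic, sees it. The minimal rule-based sketch below drops a hypothetical list of identifying fields, substitutes an opaque candidate ID and redacts email addresses from a free-text summary. It illustrates the idea only; it is not how X0PA ROOM or any other product implements masking.

```python
import re
from typing import Any, Dict

# Hypothetical field names; the right list depends on your data schema and local law.
IDENTIFYING_FIELDS = {
    "name", "email", "phone", "address", "date_of_birth", "gender", "photo_url",
}

# Rough pattern for email addresses embedded in free text.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def mask_candidate(record: Dict[str, Any], candidate_id: str) -> Dict[str, Any]:
    """Return a copy of the record that screeners can see without identifying details."""
    masked = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    masked["candidate_id"] = candidate_id  # lets HR re-link the record after screening
    summary = masked.get("summary")
    if isinstance(summary, str):
        masked["summary"] = EMAIL_PATTERN.sub("[redacted email]", summary)
    return masked


if __name__ == "__main__":
    application = {
        "name": "Jane Doe",
        "email": "jane@example.com",
        "gender": "F",
        "skills": ["python", "sql"],
        "summary": "Data analyst. Contact me at jane.doe@example.co.uk.",
    }
    print(mask_candidate(application, "C-001"))
```

A fixed field list and a single regular expression will miss identifiers written into free text, such as a name in a cover letter, so a real system would need more than this.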

For all organisations, it’s prudent to put measures in place to determine what effect AI systems are having on diversity and inclusion in recruitment, and how these objectivity efforts could be improved.
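One widely used way to measure this is to compare selection rates across demographic groups. The sketch below computes each group’s selection rate and its ratio to the highest group’s rate, and flags groups under the “four-fifths” (80%) threshold from the US Uniform Guidelines on Employee Selection Procedures. The group labels and data format are assumptions, and this is one common check rather than a complete fairness audit; tracking the figures before and after an AI tool is introduced gives a rough view of its effect.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """outcomes: (group, was_selected) pairs, one per applicant."""
    applied: Counter = Counter()
    selected: Counter = Counter()
    for group, was_selected in outcomes:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applied[group] for group in applied}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / highest for group, rate in rates.items()}


def below_four_fifths(rates: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(rates).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical screening outcomes for two applicant groups.
    data = ([("group_a", True)] * 20 + [("group_a", False)] * 30
            + [("group_b", True)] * 10 + [("group_b", False)] * 30)
    rates = selection_rates(data)
    print(rates, impact_ratios(rates), below_four_fifths(rates))
```

Running this check requires demographic data, which should be collected with consent and kept out of the screening decision itself.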

Optimise for fairness and accuracy

This one goes without saying, but we will say it anyway. Any tool used to make decisions about human capital should be constantly monitored and improved for fairness and accuracy; this is the only way recruiters can know they are acting ethically. Benchmarks must be set, and providers should always ensure that any new tool reduces errors, increases predictive accuracy and is less biased than the alternatives.
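As a sketch of what benchmarking could look like, the code below scores a tool’s predictions against observed outcomes for the same set of applicants, overall and per group, and accepts a new tool only if it is at least as accurate as the current baseline and its accuracy gap between groups is no wider. The record format and this particular acceptance rule are illustrative assumptions; real evaluations would use more metrics and larger samples.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record format: (group, predicted_success, actual_success).
Record = Tuple[str, bool, bool]


def accuracy_by_group(records: List[Record]) -> Dict[str, float]:
    """Share of correct predictions within each group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def summarise(records: List[Record]) -> Tuple[float, float]:
    """Return (overall accuracy, largest accuracy gap between any two groups)."""
    overall = sum(int(p == a) for _, p, a in records) / len(records)
    per_group = accuracy_by_group(records)
    gap = max(per_group.values()) - min(per_group.values())
    return overall, gap


def beats_benchmark(new_tool: List[Record], baseline: List[Record]) -> bool:
    """Hypothetical acceptance rule: no less accurate and no less even across groups."""
    new_accuracy, new_gap = summarise(new_tool)
    old_accuracy, old_gap = summarise(baseline)
    return new_accuracy >= old_accuracy and new_gap <= old_gap


if __name__ == "__main__":
    baseline = ([("group_a", True, True)] * 4
                + [("group_b", True, True)] * 2 + [("group_b", True, False)] * 2)
    new_tool = ([("group_a", True, True)] * 3 + [("group_a", True, False)]
                + [("group_b", True, True)] * 3 + [("group_b", True, False)])
    print(summarise(baseline), summarise(new_tool), beats_benchmark(new_tool, baseline))
```

One caveat: actual outcomes are usually only observed for people who were hired, so accuracy measured this way can itself be skewed by past selection decisions.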

One way providers can do this is through open-source systems and third-party audits. Developers can increase transparency by allowing others to audit the tools used to analyse applicants. One option is to open-source the non-proprietary yet vital parts of the AI technology an organisation uses. For proprietary components, third-party audits by credible experts in the field let companies show the public how they are reducing bias.

Allow for feedback

If AI predicts a candidate’s performance and behaviours in order to showcase their potential, or to explain why they weren’t selected, shouldn’t that person be aware of the findings? Just as a candidate may receive feedback after an interview on why they weren’t right for a job, they could receive the same analysis from AI much more quickly and easily. This could help the individual reach their potential in future endeavours.

Privacy, data collection and usage practices

Finally, AI has to follow the same laws, and the same data collection and usage practices, as traditional hiring. AI systems should not use any confidential or personal data that, for legal or ethical reasons, would not be collected or used in a traditional hiring process.

Equity over all

If these areas continue to be prioritised as these technologies progress, we strongly believe that ethical use of AI in hiring could greatly improve the makeup of organisations. AI has the power to reduce bias in hiring decisions and enhance equity and meritocracy, while strengthening the link between talent, effort and employee success. We just need to understand how to capitalise on the advantages these new technologies offer while minimising the potential risks. In the right hands, and with the right intentions behind them, AI technologies are one of the most powerful ways to make talent acquisition more equitable and inclusive well into the future.

This post by Benjamin Poh Kai Yi and Matthew Terrell was created in collaboration with our AI partner, X0PA AI, which uses AI and Machine Learning to source, score and rank talent to identify the best-fit candidate for each company and role based on its patented algorithms.
